At Inspeerity, we believe in creating innovative solutions and sharing our expertise with the community. This led us to start the “BluzBrothers podcast”, originally in Polish, where we discuss various tech topics for developers. One episode, which I had the pleasure of hosting, focused on AI’s impact on programmers and sparked great interest.

To make this podcast episode accessible globally, I translated it from Polish to English using free and low-cost AI tools. Come with me as I break this journey into steps, and see just how mind-blowing AI transcription tools really are!

Choosing the right AI tools and step-by-step process

The first crucial step was selecting the right AI tools for the task. I looked at different platforms known for their contribution to Machine Learning and model sharing. Here is what I found.

HuggingFace, GPTE, and AIToolHunt make it easy to find models and tools that can solve your challenges. You can filter them by tags, keywords, and recommendations. They also offer a model hub where you can find and share pre-trained models, and a platform for fine-tuning models on custom datasets. So you don’t even need a development environment on your local machine to test or adjust them!

*This article was written at the end of September 2023, two months after I finished my translation exercise. That is only two months, but given how quickly new tools and AI capabilities appear, it may already be obsolete.

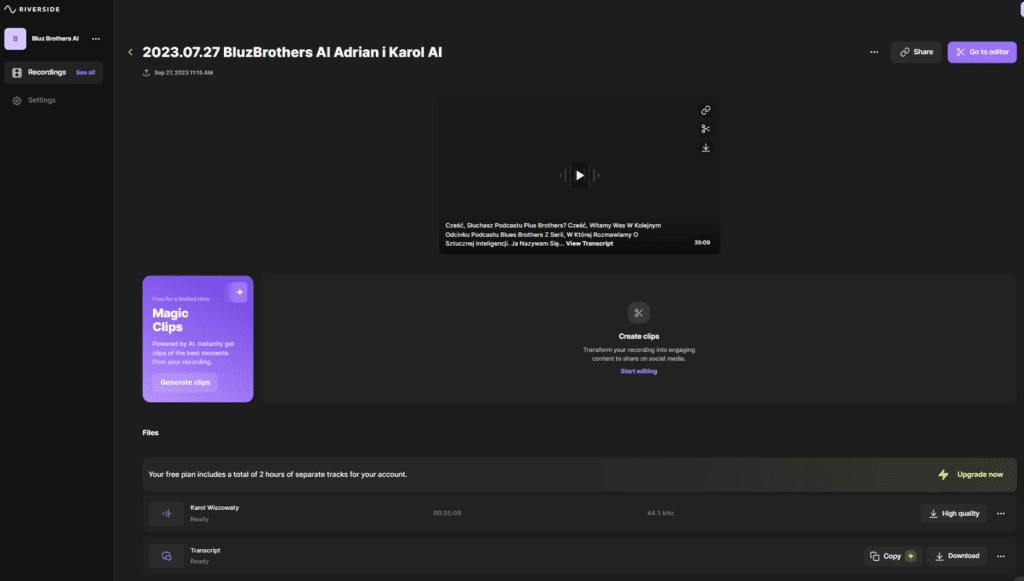

Step 1: Transcription with Riverside

To kickstart the project, I began by searching for AI tools that could effectively transcribe the audio content of our podcast. I specifically looked for “transcription” models. The options were plentiful, including Riverside, Descript, Sonix, AssemblyAI, and many others. After experimenting with several tools, Riverside stood out as a top choice for my needs.

Riverside’s crucial feature was the ability to select the input language, which was essential for transcribing our Polish podcast accurately. It also offered convenient export options, such as saving the transcript as an SRT file or splitting it by speaker, which saved me valuable time.
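For readers who want to process an exported SRT file further, here is a minimal Python sketch of reading it into numbered cues. It assumes the standard SRT layout (index line, timing line, then text); the `Cue` class, the `parse_srt` function, and the sample lines are made up for illustration and are not part of Riverside or of my actual workflow.

```python
import re
from dataclasses import dataclass


@dataclass
class Cue:
    index: int
    start: str  # timestamp as written in the file, "HH:MM:SS,mmm"
    end: str
    text: str


# Timing line of an SRT cue, e.g. "00:00:01,000 --> 00:00:04,200"
TIMING = re.compile(r"(\d{2}:\d{2}:\d{2},\d{3}) --> (\d{2}:\d{2}:\d{2},\d{3})")


def parse_srt(srt_text: str) -> list[Cue]:
    """Split SRT text into cues; blocks that do not look like cues are skipped."""
    cues = []
    # Cues are separated by one or more blank lines.
    for block in re.split(r"\n\s*\n", srt_text.strip()):
        lines = block.strip().splitlines()
        if len(lines) < 3:
            continue
        match = TIMING.match(lines[1].strip())
        if not match:
            continue
        text = " ".join(line.strip() for line in lines[2:])
        cues.append(Cue(int(lines[0].strip()), match.group(1), match.group(2), text))
    return cues


# Made-up sample, only to show the expected input shape.
SAMPLE = """1
00:00:01,000 --> 00:00:04,200
Cześć, witamy w podcaście.

2
00:00:04,500 --> 00:00:07,000
Dzisiaj rozmawiamy o AI.
"""

cues = parse_srt(SAMPLE)
for cue in cues:
    print(cue.index, cue.start, cue.end, cue.text)
```

Keeping the timestamps next to each line of text is what makes it possible to line up the translated script with the audio later on.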

Step 2: Translation with ChatGPT

With the Polish podcast transcribed into text, the next step was to translate it into English. I opted to use ChatGPT for its contextual understanding. By providing ChatGPT with the entire context, I could ensure that the generated translation aligned with the intended meaning, minimizing errors and mistranslations.

Another point that set ChatGPT apart was its versatility. In a single query, I could request translations into any language I desired.
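To illustrate what "providing the entire context" can look like, here is a small Python sketch that assembles one prompt from a speaker-labelled transcript. The prompt wording, the `build_translation_prompt` function, and the speaker labels are assumptions for the example, not the exact text I sent to ChatGPT; the sketch only builds the prompt string and does not call any API.

```python
def build_translation_prompt(lines, source="Polish", target="English", topic=""):
    """Build one prompt containing the whole transcript.

    `lines` is a list of (speaker, text) pairs in conversation order.
    Numbering every line makes it easier to map the translation back
    onto the original transcript afterwards.
    """
    header = (
        f"Translate the following podcast transcript from {source} to {target}.\n"
        "Keep the line numbers and speaker labels unchanged, "
        "and return exactly one output line per input line.\n"
    )
    if topic:
        header += f"Context: {topic}\n"
    body = "\n".join(
        f"{number}. {speaker}: {text}"
        for number, (speaker, text) in enumerate(lines, start=1)
    )
    return header + "\n" + body


# Made-up transcript lines, only for illustration.
transcript = [
    ("Speaker A", "Cześć, witamy w podcaście."),
    ("Speaker B", "Dzisiaj rozmawiamy o AI."),
]

prompt = build_translation_prompt(
    transcript, topic="a podcast for developers about AI's impact on programmers"
)
print(prompt)
```

Changing the `target` argument is all it takes to ask for a different language with the same transcript.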

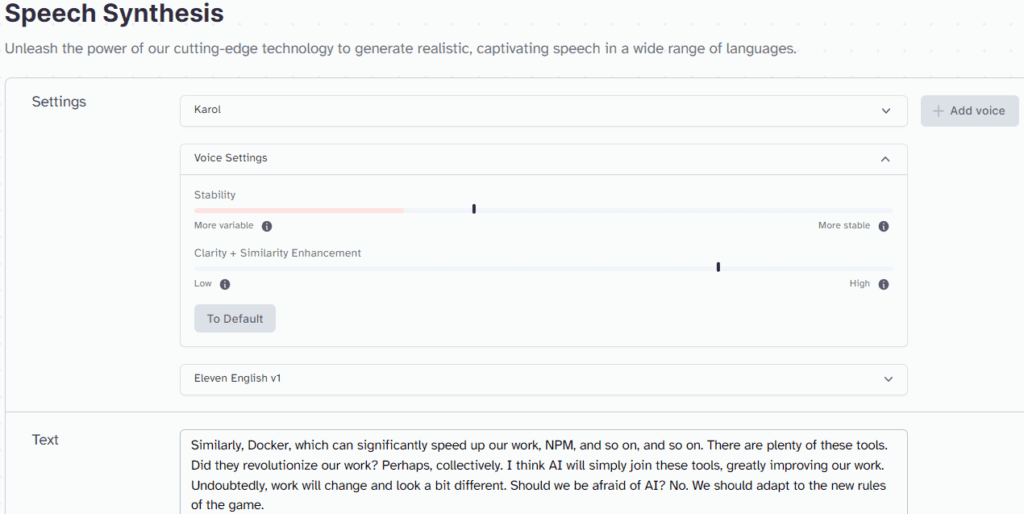

Step 3: Voice recording with ElevenLabs

To give life to the translated script, I needed an AI tool for voice recording. I explored several options, including Descript (again), ElevenLabs, TypecastAI, Synthesys.io, and Listnr. These tools offered an impressive array of voices to choose from, including iconic voices like Clinton’s, Obama’s, Freddie Mercury’s or Mick Jagger’s.

However, at Inspeerity we take pride in doing things ourselves, in our own way. Therefore, I had a unique requirement: I wanted to use our own voices, mine and that of my colleague Adrian Oleszczak, who co-hosted the podcast with me. This led me to look for a tool that could be trained on our voices for accurate recordings.

Initially, I tried free tools, but they didn’t support Polish as the input language for voice learning, which is crucial for the quality of the generated voice. To address this, I invested $1 in ElevenLabs’ cheapest license, which let us train the AI model on our voices and set Polish as the input language.

ElevenLabs also provided the flexibility to fine-tune the voices by adjusting parameters for voice stability and clarity, which matters when the source recordings contain background artifacts.

With ElevenLabs, I successfully generated two tracks: one for my voice and another for Adrian’s.

Step 4: Mixing with Audacity

The final piece of the puzzle was mixing the voice tracks and aligning them with the translated script. For this task, I turned to Audacity, a versatile audio editing tool that I already knew. While this step required a more manual approach, it allowed me to ensure the highest level of synchronization and quality in the final English version of our podcast.
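I did this alignment by hand in Audacity, but the underlying arithmetic is simple enough to sketch in Python: given the generated clips in conversation order, each clip starts where the previous one ended plus a short pause. The `Clip` class, the `layout_timeline` function, the clip durations, and the 0.3-second pause are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    speaker: str
    duration: float  # length of the generated audio clip, in seconds


def layout_timeline(clips, gap=0.3):
    """Return (speaker, start, end) tuples for clips played back to back.

    `gap` is the pause, in seconds, inserted between consecutive clips.
    """
    timeline = []
    cursor = 0.0
    for clip in clips:
        start = cursor
        end = start + clip.duration
        timeline.append((clip.speaker, round(start, 3), round(end, 3)))
        cursor = end + gap
    return timeline


# Made-up clip lengths for two alternating speakers.
conversation = [Clip("A", 2.0), Clip("B", 1.5), Clip("A", 3.0)]

for speaker, start, end in layout_timeline(conversation):
    print(f"{speaker}: {start:.1f}s to {end:.1f}s")
```

The resulting start times are the offsets at which each clip would be placed on its speaker's track in an audio editor.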

Quality assurance

Throughout the entire process of translating our Polish podcast into English using AI, quality assurance played a pivotal role. As I was doing this for the first time, I wasn’t sure what results to expect. This phase was therefore essential in ensuring that the final product not only met high standards but also showcased the true capabilities of current AI technologies.

Step 1: Monitoring

Throughout the journey, I closely monitored the AI’s output while trying to interfere as little as possible. I intervened only when necessary, correcting glaring mistakes (usually limited to passages where certain words or phrases were unclear even to a native listener). Corrections accounted for less than 0.5% of the script, which shows how accurately the AI handled the task.
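A figure like this can be estimated by comparing the AI's text with the corrected text word by word. The Python sketch below uses the standard library's `difflib.SequenceMatcher`; the `correction_rate` function and the sample sentences are illustrative assumptions, and this is one possible way to count corrections, not necessarily how the number above was obtained.

```python
from difflib import SequenceMatcher


def correction_rate(ai_text: str, corrected_text: str) -> float:
    """Fraction of words in the AI output that were changed by hand.

    Words are compared as whole tokens; every replaced, inserted, or
    deleted run of words counts by the length of its longer side.
    """
    ai_words = ai_text.split()
    fixed_words = corrected_text.split()
    matcher = SequenceMatcher(a=ai_words, b=fixed_words, autojunk=False)
    changed = sum(
        max(i2 - i1, j2 - j1)
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    )
    # Guard against an empty AI output to avoid dividing by zero.
    return changed / max(len(ai_words), 1)


# Made-up sentences: one word out of five was corrected.
rate = correction_rate(
    "the quick brown fox jumps",
    "the quick red fox jumps",
)
print(f"{rate:.1%} of the words were corrected")
```

Run on the full script before and after manual review, the same function gives a quick, repeatable measure of how much human correction was needed.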

Step 2: Post-Processing

After I generated and recorded the English podcast script, it underwent post-processing. This step included cleaning up background noise, which was there because I had trained the voices on raw Polish material that had not yet been post-processed (this shows that AI readily imitates imperfections in recordings, which makes the result sound more natural). If I had used the final podcast version, this step wouldn’t have been necessary.

I also manually merged two separate voice tracks to maintain a natural conversation flow between my colleague and me, which was a simple and quick task.

Step 3: Real-World testing

A significant step in quality assurance was real-world testing. I initially tested it with colleagues and peers familiar with our voices. Their feedback was invaluable.

The results were fascinating and reassuring. Testers were genuinely impressed by the work, noting that the AI-generated voices closely resembled ours. While people who talk to us every day noticed subtle differences, some listeners didn’t even realize the voices were AI-generated.

Did I become a multilingual podcaster? Let me know!

I would be keen to hear your feedback. Did the AI do a good job? Below are the links to both the Polish and English (AI-powered) versions of the podcasts.

- Polish Language, AI – czy developerzy powinni się bać?

- English Language, AI – Should developers be afraid?

Don’t hesitate to share your impressions and suggestions with me!

Key takeaways

- Reaching everyone, everywhere. AI isn’t just about cool tech. It’s a game-changer because it lets anyone, anywhere, understand what you’re saying. Imagine your content reaching corners of the world you’d never even thought of!

- Continuous AI advancements. AI technology is evolving rapidly, with new tools and capabilities emerging regularly. Content creators can expect an ever-expanding toolkit to simplify tasks, improve quality, and streamline their work processes.

- AI is not perfect… yet. AI is great, but it still needs a bit of a human touch. Who knows, in a bit, we might just be letting AI do its thing without any babysitting 😊

- The future? Instant translations! Picture this: AI-aided earphones and apps that translate everything in real time. Chatting with someone from another country might soon feel like they’re from your hometown.

Tools & platforms used

In my journey, I had the privilege of using some remarkable AI tools and resources. I’m deeply thankful to the developers and organizations behind these tools for their invaluable contributions to AI-driven content translation. Below, I credit and acknowledge the tools and resources used in this experiment:

- Riverside: it provided a stellar transcription tool that enabled me to convert the audio content of our podcast into text with precision. Explore Riverside at Riverside.

- ChatGPT by OpenAI: it facilitated the translation of our podcast script into English. The contextual understanding and language capabilities of ChatGPT played a pivotal role in achieving our desired output. Discover ChatGPT at ChatGPT.

- ElevenLabs: this tool enabled me to train AI models on our voices and produce high-quality voice recordings. ElevenLabs played a critical role in making our podcast sound authentic and engaging. Learn more about ElevenLabs at ElevenLabs.

- Audacity: it allowed me to mix and refine our podcast audio. Audacity’s versatility proved indispensable in achieving the final polished result. Explore Audacity at Audacity.

- HuggingFace, GPTE, and AIToolHunt: these AI tool aggregators helped me discover and select the right AI tools for my project. These platforms connect the AI community and provide access to a wide array of models, datasets, and applications. Discover HuggingFace at HuggingFace, GPTE at GPTE, and AIToolHunt at AIToolHunt.

- Microsoft JARVIS: while I didn’t use it for this project, I acknowledge Microsoft JARVIS as a noteworthy AI tool. JARVIS promises to streamline content creation and offers exciting possibilities for the future. Explore Microsoft JARVIS at JARVIS.

If you’d like to try translating your recordings or podcasts as well, these tools will certainly make your work easier.

Final thoughts on AI’s performance

In conclusion, my experiment to translate a Polish podcast into English using AI was a resounding success. The AI not only met but often exceeded my expectations, especially when considering that it created content in English, a language it had never heard us speak before. The project highlighted the remarkable capabilities of AI in breaking down language barriers and creating engaging, authentic content.

If you liked this article, stay connected with us on social media to keep up with the latest developments in technology, software development, and AI. And if you’re a developer, make sure to subscribe to our BluzBrothers podcast!